Chances are, you’ve come across gesture recognition before. If you’ve ever given someone a thumbs up with Apple’s Reactions or played around with Snapchat or TikTok filters, your device was recognizing your gestures in real time.

Gesture detection is quickly going mainstream, making remote conversations feel more natural and engaging. Let’s explore how to detect hand gestures in JavaScript running in the browser and build a simple videoconferencing app with special effects.

What is gesture recognition?

Gesture recognition is a technology that finds and identifies hand gestures in images and video. It’s closely related to pose detection, which identifies and tracks the positions of people in an image or video. What’s more, it’s commonly used to add effects to a video or image source based on the detected gestures.

AI-powered features in videoconferencing

Gesture recognition or gesture control falls into a broader category of videoconferencing features that use AI to enhance the user’s experience. Other common features include:

- background blur

- virtual backgrounds

- automatic transcription

Challenges in real-time gesture recognition

Unfortunately, real-time gesture recognition is hard to implement (especially in the browser!), since it combines multiple traditionally difficult tasks: computer vision, low-latency videoconferencing and real-time video compositing.

Running AI models and low-latency streaming are resource-heavy tasks that rely on a range of advanced browser APIs like Web Workers, WebRTC, WebGL, and, more recently, WebGPU. Video compositing in the browser has been around for a while, but it’s only recently gotten more attention thanks to the new WebCodecs and experimental Insertable Streams APIs.

Implementing gesture recognition in videoconferencing

Now, we’re going to build a simple React app that lets users join video calls and trigger visual effects whenever they make a ‘timeout’ gesture — because sometimes, a conversation just needs a reset.

To build this app, we need to pick the right tools for the job. There are three key challenges to solve, which we’ll break down below.

Detecting gestures in real-time

To detect gestures in real time, we’re going to use MediaPipe for its hand landmark detection model. MediaPipe also has a ready-to-go gesture recognition model, but it doesn’t fit our specific use case. For other AI-powered features, you may want to look at TensorFlow.js and Transformers.js.

Video compositing in real-time

To render video effects, we’re going to use Smelter for its speed and simple component-based API.

It’s written in Rust and primarily designed for server-side compositing, but thanks to a new WASM build, it can now run entirely in the browser. At the moment, this build works only in Chrome and Safari, though we’re actively working on support for other popular browsers. If you need to target every platform, your safest bet is the built-in HTML Canvas API, but beware, there be dragons.

Real-time communication

This is the backbone of our videoconferencing application. The collection of protocols that allows us to achieve low-latency videoconferencing is called WebRTC. Implementing WebRTC manually requires a lot of development time and infrastructure, so we’re going to use Fishjam (a live streaming and video conferencing API), because its room manager allows us to prototype for free, without our own backend server.

Obtaining the camera’s video stream

To start detecting gestures, we need to obtain the video stream from our device’s camera. Normally, we would call the built-in getUserMedia() to get a MediaStream, but we’re going to use Fishjam’s useCamera hook, which integrates the above API with the React lifecycle:

export default function App() {

...

const { cameraStream } = useCamera();

...

}

Detecting gestures with MediaPipe

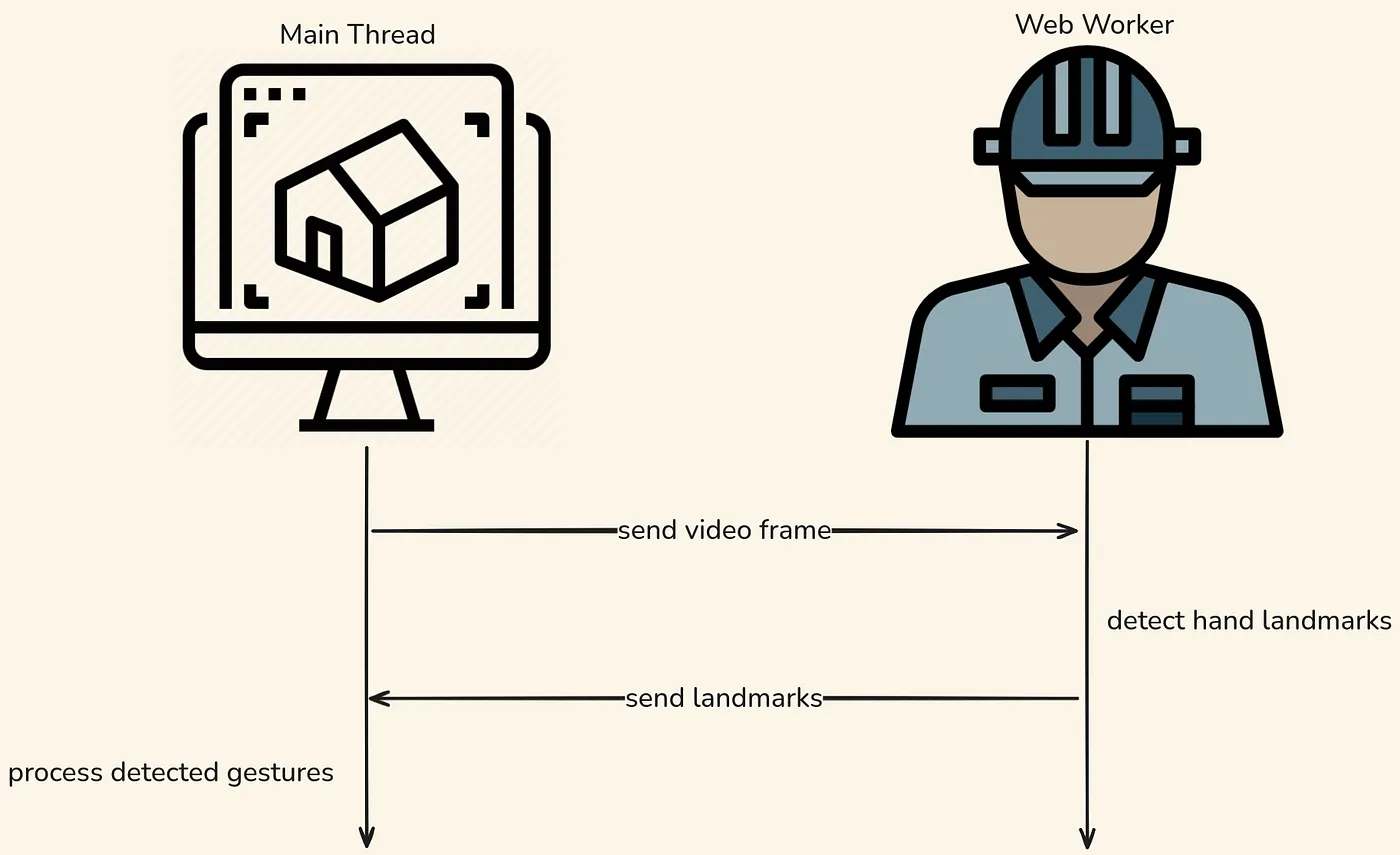

Gesture recognition is the most resource-intensive part of our app, so we’re going to have to be careful with how and where we run it. To avoid blocking the main thread when running the hand landmark detection model, we’re going to use a Web Worker to run the detections asynchronously.

Below, you’ll find the complete code for the Web Worker. It receives messages containing VideoFrame objects and replies with any hand landmarks it detects.

// worker.js

let landmarker;

const init = async () => {

const { FilesetResolver, HandLandmarker } = await import(

"@mediapipe/tasks-vision"

);

// load the correct WASM bundle

const vision = await FilesetResolver.forVisionTasks(

"https://cdn.jsdelivr.net/npm/@mediapipe/tasks-vision@latest/wasm",

);

landmarker = await HandLandmarker.createFromOptions(vision, {

baseOptions: {

modelAssetPath:

"https://storage.googleapis.com/mediapipe-models/hand_landmarker/hand_landmarker/float16/latest/hand_landmarker.task",

},

runningMode: "VIDEO",

numHands: 2,

});

};

init();

self.onmessage = ({ data: { frame } }) => {

const detections = landmarker?.detectForVideo(frame, frame.timestamp);

frame.close();

postMessage(detections?.landmarks ?? []);

};

Note that we set runningMode: "VIDEO" to benefit from hand tracking, which improves the accuracy of hand detections. We also set numHands: 2, since the gesture involves both hands and the default value is 1.
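The worker's reply is one array per detected hand, and each hand holds 21 landmarks whose x and y values are normalized to the frame size. As a minimal sketch of how the receiving side might interpret that reply, the helpers below check that both hands are present and convert a landmark to pixel coordinates. The names `hasBothHands` and `toPixels` are hypothetical and not part of MediaPipe or the demo.

```typescript
// Shape of a single hand landmark; x and y are normalized to the frame size.
type Landmark = { x: number; y: number; z: number };

// MediaPipe's hand model reports 21 landmarks per hand.
const LANDMARKS_PER_HAND = 21;

// Hypothetical helper: true when the reply contains two complete hands.
function hasBothHands(hands: Landmark[][]): boolean {
  return (
    hands.length === 2 &&
    hands.every((hand) => hand.length === LANDMARKS_PER_HAND)
  );
}

// Hypothetical helper: convert a normalized landmark to pixel coordinates.
function toPixels(
  point: Landmark,
  width: number,
  height: number,
): { x: number; y: number } {
  return { x: point.x * width, y: point.y * height };
}
```

A check like `hasBothHands` is a cheap way to skip further work on frames where the two-handed gesture cannot be present.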

The worker on its own isn’t very useful. We also need to capture the camera’s MediaStream and send frames to the worker from the main thread.

// GestureDetector.ts

export type HandGesture = "NONE" | "TIMEOUT";

export class GestureDetector {

private video: HTMLVideoElement;

private prevTime: number = 0;

private closing: boolean = false;

private worker: Worker;

constructor(

stream: MediaStream,

detectionCallback: (gesture: HandGesture) => void,

) {

this.video = document.createElement("video");

this.video.srcObject = stream;

this.video.play();

// start the Web Worker

this.worker = new Worker(new URL("./worker.js", import.meta.url));

// callback to run when worker responds with landmarks

this.worker.onmessage = ({ data }) => {

detectionCallback(findGesture(data));

this.video.requestVideoFrameCallback(() => this.detect());

};

// begin the gesture detection loop

this.video.requestVideoFrameCallback(() => this.detect());

}

detect() {

if (this.closing) return;

const currentTime = this.video.currentTime;

// check if the video has advanced forward

if (this.prevTime >= currentTime) {

this.video.requestVideoFrameCallback(() => this.detect());

return;

}

this.prevTime = currentTime;

const frame = new VideoFrame(this.video);

this.worker.postMessage({ frame }, [frame]);

}

close() {

this.closing = true;

this.video.remove();

this.worker.terminate();

}

}

The above code does a few things:

- It creates a <video> element that will handle playback of the MediaStream.

- It starts the Web Worker that will run the MediaPipe model in the background.

- It begins the gesture detection loop by repeatedly calling requestVideoFrameCallback(), which allows us to run code when the video frame changes.

Recognizing the “timeout” gesture from hand landmarks

In the GestureDetector implementation, we use a seemingly magical function called findGesture(), which takes hand landmarks and returns a gesture. But in reality, the function is quite simple; it just checks four things:

- Are all fingers straight?

- Are the fingers of each hand pointing in the same direction?

- Are the hands positioned perpendicular to each other?

- Is the tip of the middle finger on one hand placed in the palm of the other?

If the answer to all four questions is yes, then it’s a clear ‘timeout’ gesture! If you want to know the specifics of how to check the above conditions, then you can check out the demo’s source code.
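To give a feel for what such checks can look like, here is a rough sketch of the first and third conditions using plain vector math. It is not the demo's actual implementation: the function names and the thresholds (0.9 and 0.3) are assumptions chosen for illustration. The only thing taken from MediaPipe is the landmark numbering, where 0 is the wrist and each non-thumb finger runs from its base joint to its tip (for example 5 to 8 for the index finger).

```typescript
type Landmark = { x: number; y: number; z: number };
type Hand = Landmark[]; // 21 landmarks per hand

// [base joint, middle joint, tip] indices for the four non-thumb fingers
const FINGERS: [number, number, number][] = [
  [5, 6, 8], // index
  [9, 10, 12], // middle
  [13, 14, 16], // ring
  [17, 18, 20], // pinky
];

const sub = (a: Landmark, b: Landmark): Landmark => ({
  x: a.x - b.x,
  y: a.y - b.y,
  z: a.z - b.z,
});
const dot = (a: Landmark, b: Landmark): number =>
  a.x * b.x + a.y * b.y + a.z * b.z;

// Cosine of the angle between two vectors (NaN for zero-length vectors)
const cosine = (a: Landmark, b: Landmark): number =>
  dot(a, b) / Math.sqrt(dot(a, a) * dot(b, b));

// A finger is treated as straight when its two segments point the same way.
// minCos is an assumed threshold.
function isFingerStraight(
  hand: Hand,
  [base, joint, tip]: [number, number, number],
  minCos = 0.9,
): boolean {
  const lower = sub(hand[joint], hand[base]);
  const upper = sub(hand[tip], hand[joint]);
  return cosine(lower, upper) >= minCos;
}

function areAllFingersStraight(hand: Hand): boolean {
  return FINGERS.every((finger) => isFingerStraight(hand, finger));
}

// Overall hand direction: from the wrist (0) to the middle fingertip (12)
const handDirection = (hand: Hand): Landmark => sub(hand[12], hand[0]);

// Hands are treated as perpendicular when their directions are close to
// a right angle. maxAbsCos is an assumed threshold.
function arePerpendicular(a: Hand, b: Hand, maxAbsCos = 0.3): boolean {
  return Math.abs(cosine(handDirection(a), handDirection(b))) <= maxAbsCos;
}
```

Comparing cosines against a threshold avoids calling inverse trigonometric functions on every frame, and the thresholds can be loosened if the detection turns out to be too strict in practice.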

Lastly, we’re going to integrate GestureDetector with the React lifecycle by creating a useGesture hook:

export const useGesture = (stream: MediaStream | null) => {

const [gesture, setGesture] = useState<HandGesture>("NONE");

useEffect(() => {

if (!stream) return;

const detector = new GestureDetector(stream, setGesture);

return () => {

detector.close();

setGesture("NONE");

};

}, [stream]);

return gesture;

};

Adding effects to the video stream

The last thing we need to do is trigger an effect when we detect the "TIMEOUT" gesture. As shown in the GIF below, we want some text to slide in, pause, and then slide out.
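One way to reason about the slide in, pause, slide out sequence is as three equal phases that follow a short start delay. The sketch below is a plain function that maps elapsed time to a phase. The name `animationPhase` and the phase labels are hypothetical; the split into thirds mirrors the timing used by the Animation component later in this article.

```typescript
type Phase = "waiting" | "slide-in" | "pause" | "slide-out" | "done";

// Hypothetical helper: which phase of the effect is active after elapsedMs?
// totalMs is the full length of the effect, startDelayMs the initial wait.
function animationPhase(
  elapsedMs: number,
  totalMs: number,
  startDelayMs: number,
): Phase {
  // the time left after the delay is split into three equal phases
  const phaseMs = (totalMs - startDelayMs) / 3;

  if (elapsedMs < startDelayMs) return "waiting";
  if (elapsedMs < startDelayMs + phaseMs) return "slide-in";
  if (elapsedMs < startDelayMs + 2 * phaseMs) return "pause";
  if (elapsedMs < totalMs) return "slide-out";
  return "done";
}
```

With a total of 5000 ms and a 100 ms start delay, each phase lasts a little over 1.6 seconds.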

To add effects to the camera stream, we need to register it as an input with Smelter:

const { cameraStream } = useCamera();

...

await smelter.registerInput("cameraStream", {

type: "stream",

stream: cameraStream.clone(),

});

Now that we have the input, we need to set up an output to tell Smelter what to do with it:

const { stream: output } = await smelter.registerOutput(

"modifiedCamera",

<VideoWithEffects

stream={cameraStream}

inputId="cameraStream"

/>,

{

type: "stream",

video: { resolution: { width: 1280, height: 720 } },

},

);

// we can now use output to interact with the modified stream

// e.g. we can tell Fishjam to send the modified stream to others

import { useCustomSource } from "@fishjam-cloud/react-client";

const { setStream } = useCustomSource("custom-camera");

...

setStream(output);

Note that smelter.registerOutput() takes three arguments:

- The ID of the output

- The layout of the output

- The options of the output, most notably the type (this can be “stream”, “whip” or “canvas”) and resolution.

The layout is the most interesting part, since it can be a React component, which means the output video layout can be reactive. We’re going to make use of this in our <VideoWithEffects> component:

// VideoWithEffects.tsx

export type VideoWithEffectsProps = {

stream: MediaStream;

inputId: string;

};

const DURATION = 5000;

export default function VideoWithEffects({

stream,

inputId,

}: VideoWithEffectsProps) {

const gesture = useGesture(stream);

const [animating, setAnimating] = useState(false);

useEffect(() => {

if (gesture === "TIMEOUT" && !animating) {

// start the animation

setAnimating(true);

// reset the flag when the animation is done

setTimeout(() => setAnimating(false), DURATION + 500);

}

}, [gesture, animating]);

return (

<View>

<Rescaler>

<InputStream inputId={inputId} />

</Rescaler>

{animating && (

<Animation duration={DURATION} />

)}

</View>

);

}

The components <View> and <Rescaler> are baked into the Smelter TypeScript SDK, which has a lot of utilities for creating layouts. The layout is reactive thanks to the useGesture() hook, which allows us to render an <Animation> whenever a gesture is recognized. A simple example implementation of <Animation> is shown below:

// Animation.tsx

export type AnimationProps = {

duration: number;

};

type AnimationState = "before" | "pause" | "after";

const START_DELAY = 100;

const WIDTH = 1280;

export default function Animation({ duration }: AnimationProps) {

const [animationState, setAnimationState] =

useState<AnimationState>("before");

const durationMs = (duration - START_DELAY) / 3;

// slide in from the right and out to the left

const right = useMemo(() => {

switch (animationState) {

case "before":

return WIDTH;

case "pause":

return 0;

default:

return -2 * WIDTH;

}

}, [animationState]);

useEffect(() => {

setTimeout(() => {

setAnimationState("pause");

setTimeout(() => setAnimationState("after"), 2 * durationMs);

}, START_DELAY);

}, [durationMs]);

return (

<View style={{ top: 0, left: 0 }}>

<Rescaler

style={{ bottom: 0, right }}

transition={{ durationMs, easingFunction: "bounce" }}

>

<Image source="/assets/timeout-text.gif" />

</Rescaler>

</View>

);

}

Try it out yourself

We’ve covered the core components needed to implement gesture recognition in TypeScript, running right in the browser. If you want to see the full example in action, make sure to check out our hosted demo, or its source code on GitHub. If you’re working on AI-based features with real-time video and you need further help, reach out to us on Discord.

Closing remarks

Real-time gesture recognition isn’t without its challenges, but thanks to tools like MediaPipe, Fishjam and Smelter, it’s getting a whole lot easier, especially on the web. And with powerful solutions like the Insertable Streams API becoming more widely available, the future of browser-based video effects looks really promising.

We’re Software Mansion: multimedia experts, AI explorers, React Native core contributors, community builders, and software development consultants.